In this article, you will learn how to build a video streaming app with Flutter and Mux – see how Mux helps to handle all the complex steps of the video streaming process and how to integrate it with Flutter.

This article is written by Souvik Biswas

Video streaming is an important aspect of various kinds of applications, ranging from social media apps like TikTok and Instagram (Reels), which engage users with short video clips, to proprietary video-sharing applications similar to YouTube, which allow users to share medium-length to long videos.

A video streaming feature is a welcome addition to some apps and the centerpiece of others, but managing the infrastructure and handling every aspect of streaming is cumbersome work.

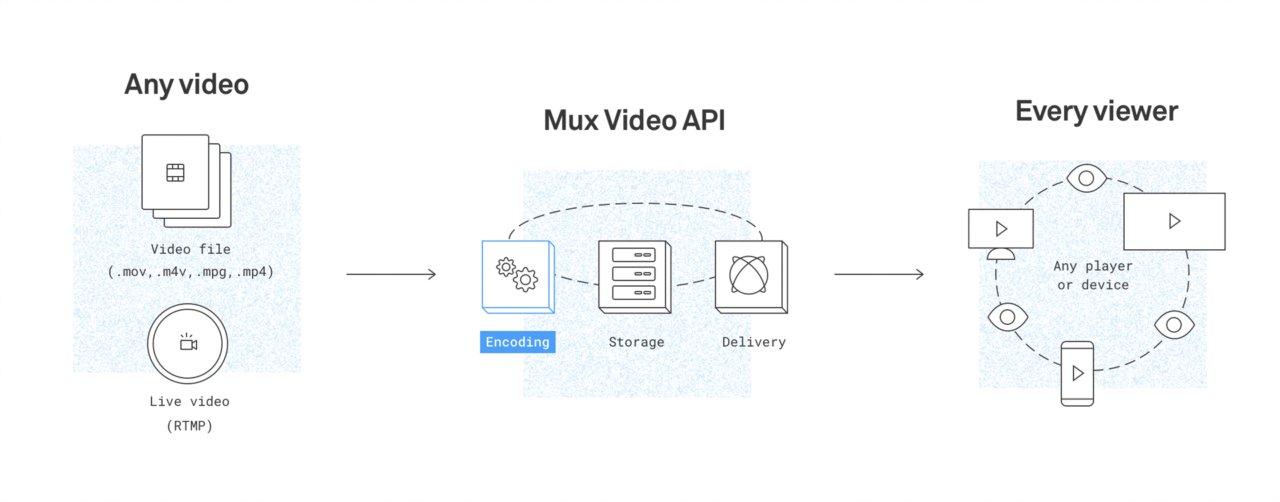

Some of the main steps involved in video streaming are:

- Encoding and decoding the video

- Managing video database storage

- Support for multiple video formats

- Distribution


Getting started with building a video streaming app with Flutter and Mux

Mux is an API-based video streaming service that handles encoding and decoding a video and distributing it to users. On top of that, it provides additional features such as subtitles, thumbnails, GIFs, and watermarking. It also has a data tracking API that helps monitor video streaming performance.

Though Mux helps in streaming the video, it doesn’t provide any storage. So, you have to provide the URL where you have stored the video.

To get started using Mux, create an account here.

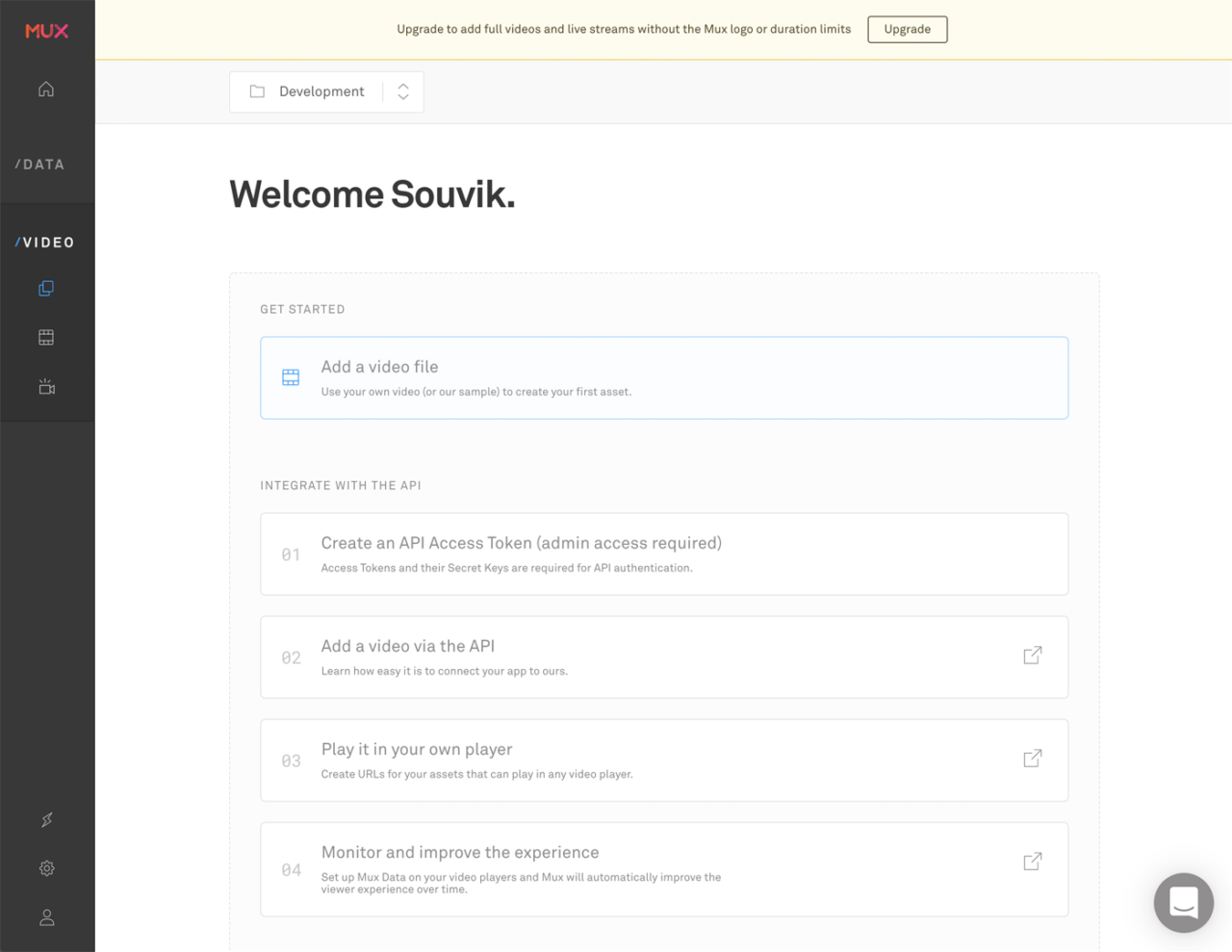

When you log in to Mux, it will take you to the Dashboard.

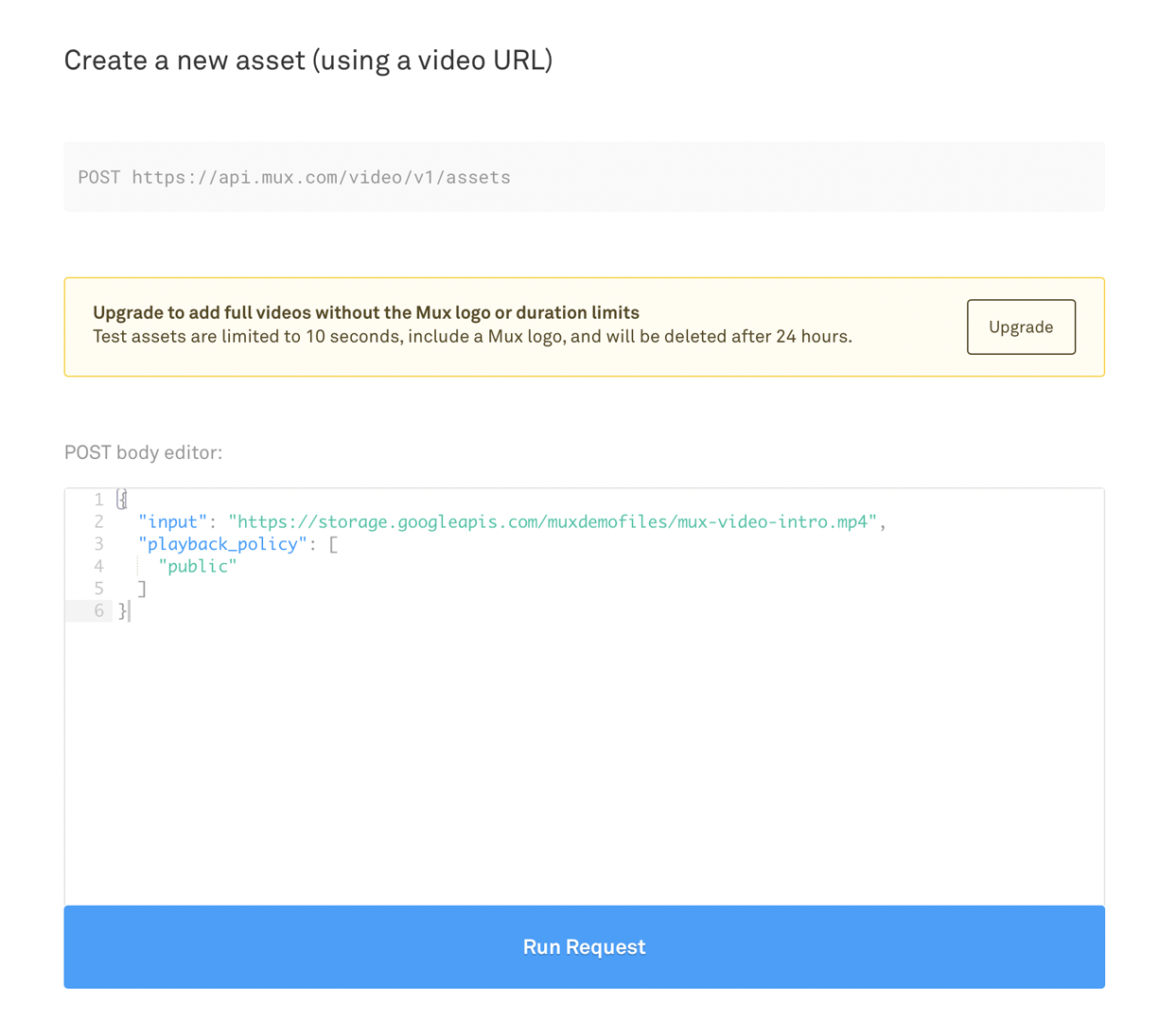

You can try adding a video by going to the Add a video file section and running the POST request with the URL of the video file.

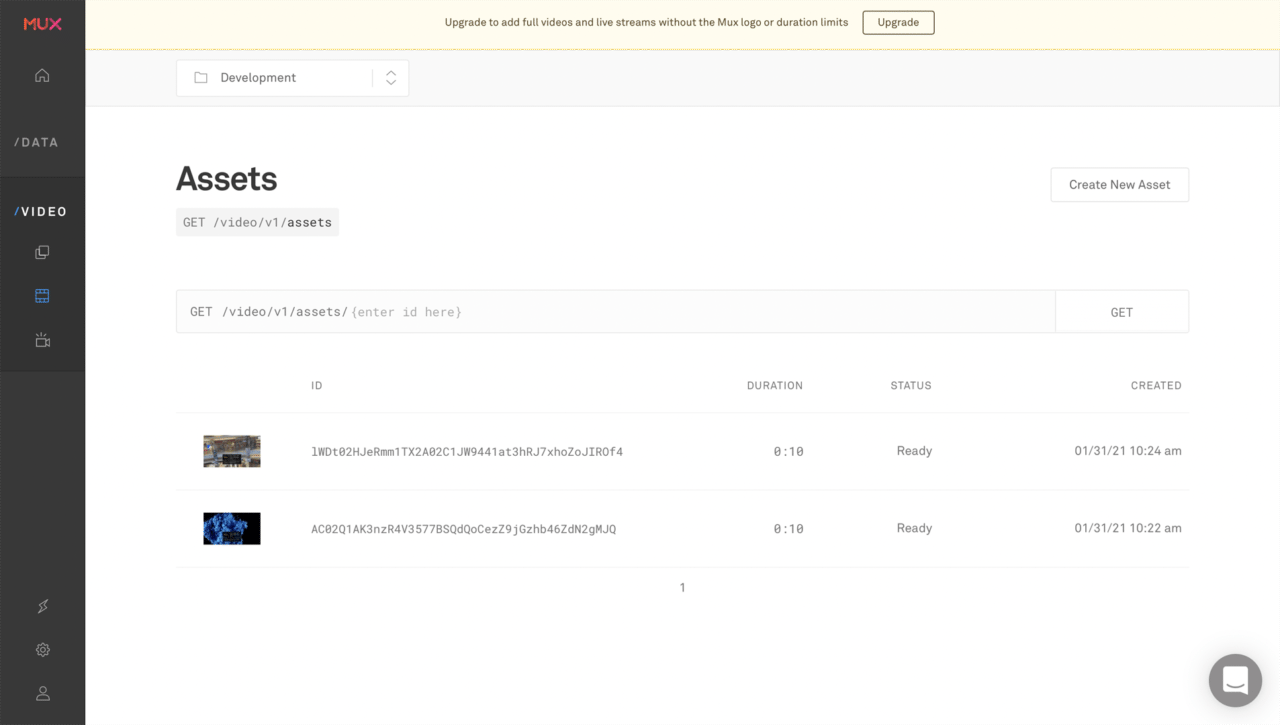

Added videos are listed in the Assets section of Mux.

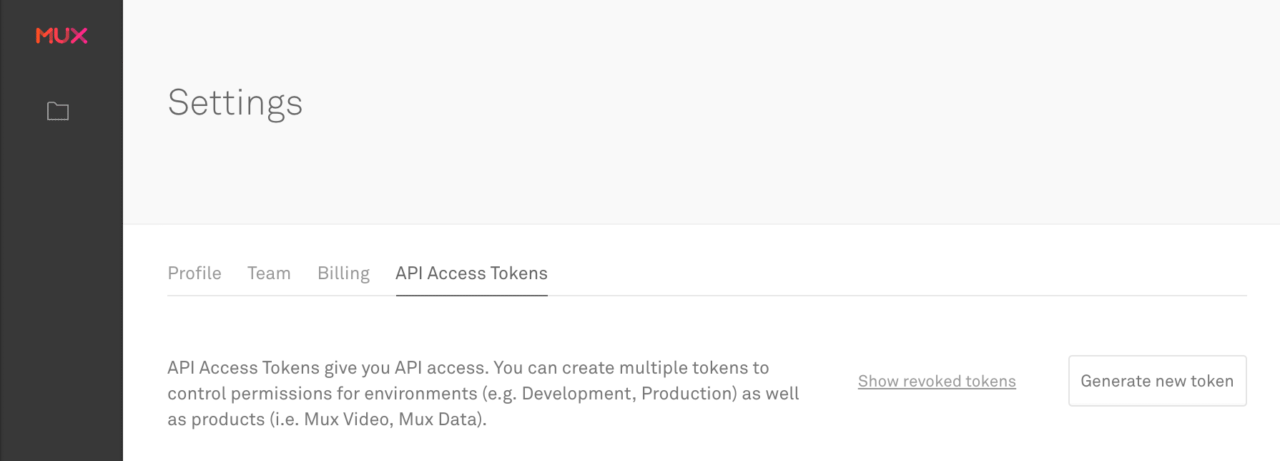

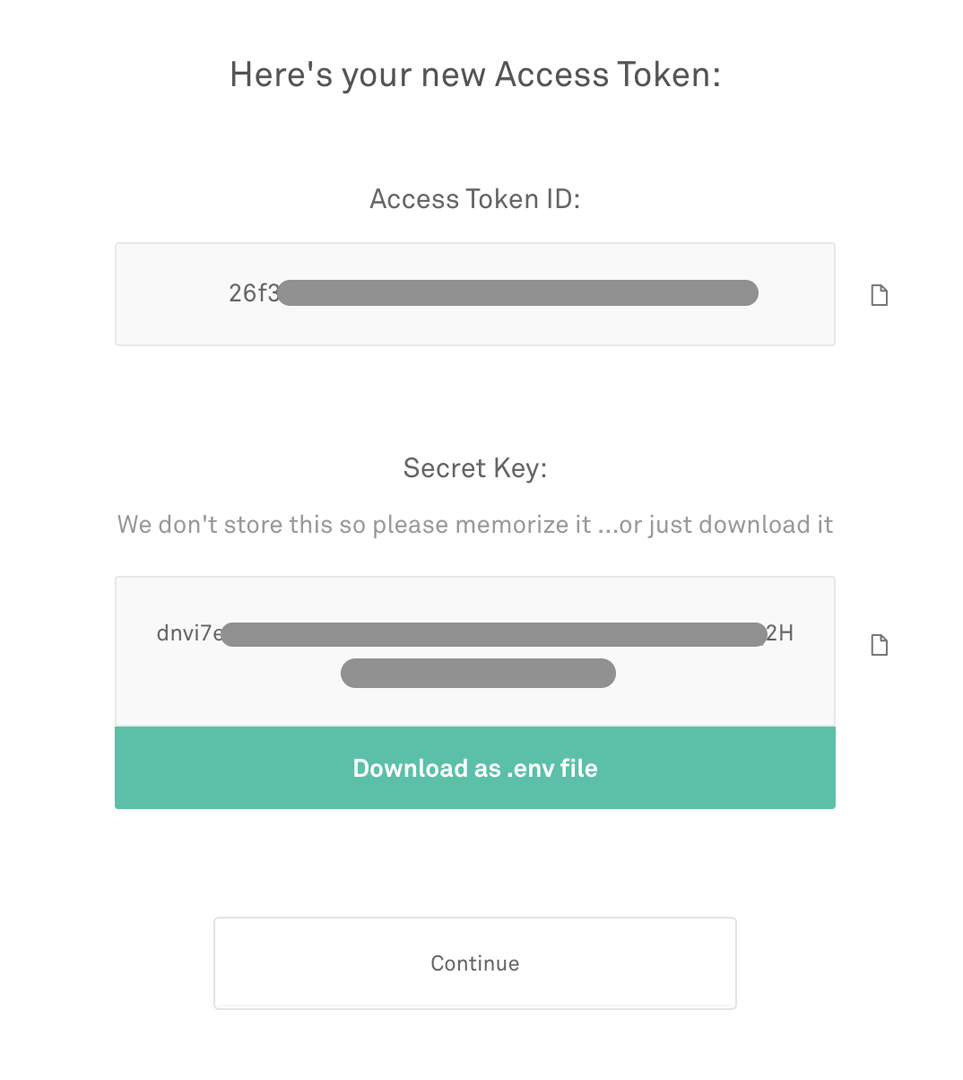

In order to use the Mux API, you will need to generate an API Access Token from here.

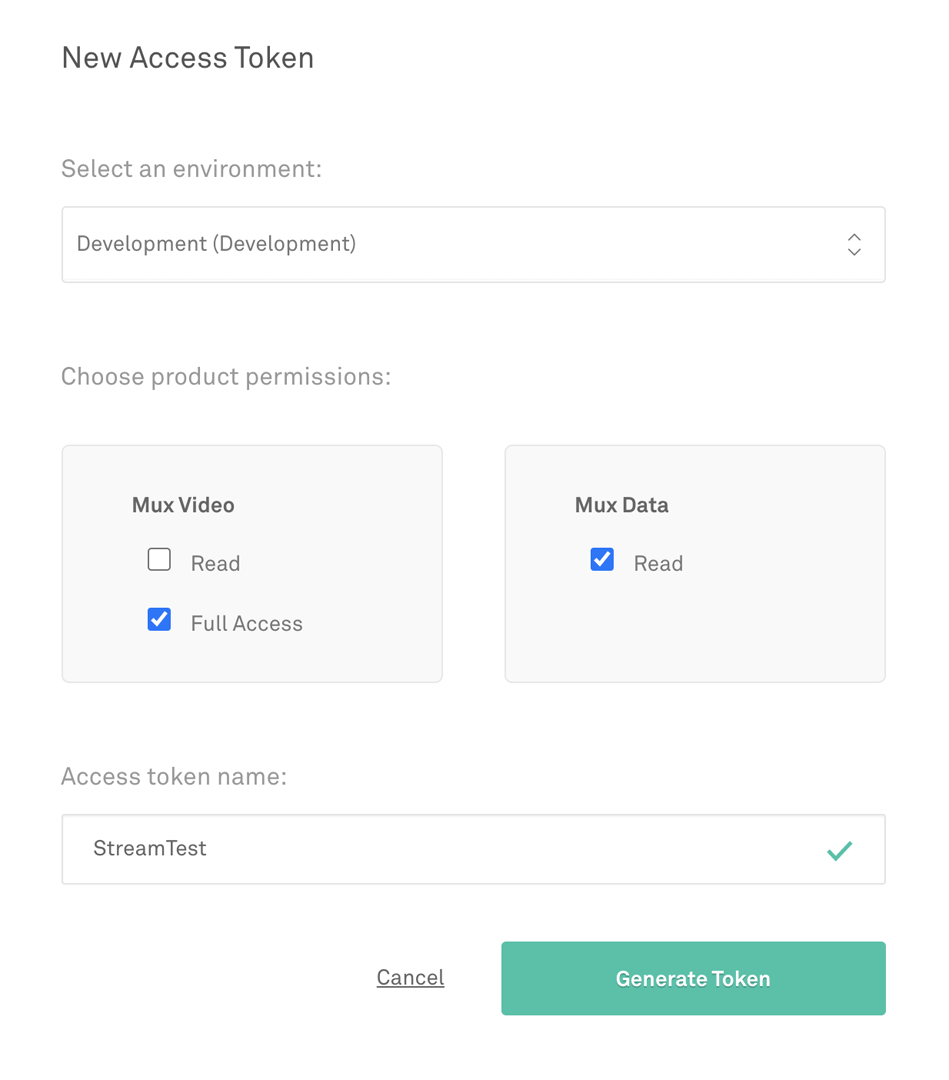

Fill in the details properly, and click on Generate Token.

This will generate a Token ID and Token Secret for API authentication.

Copy these credentials and store them somewhere private. (Don’t commit these keys to the version control system – if you save them in a file inside the project, add that file to .gitignore.)

Now, let’s dive into the main part, integrating the Mux API with a Flutter app.

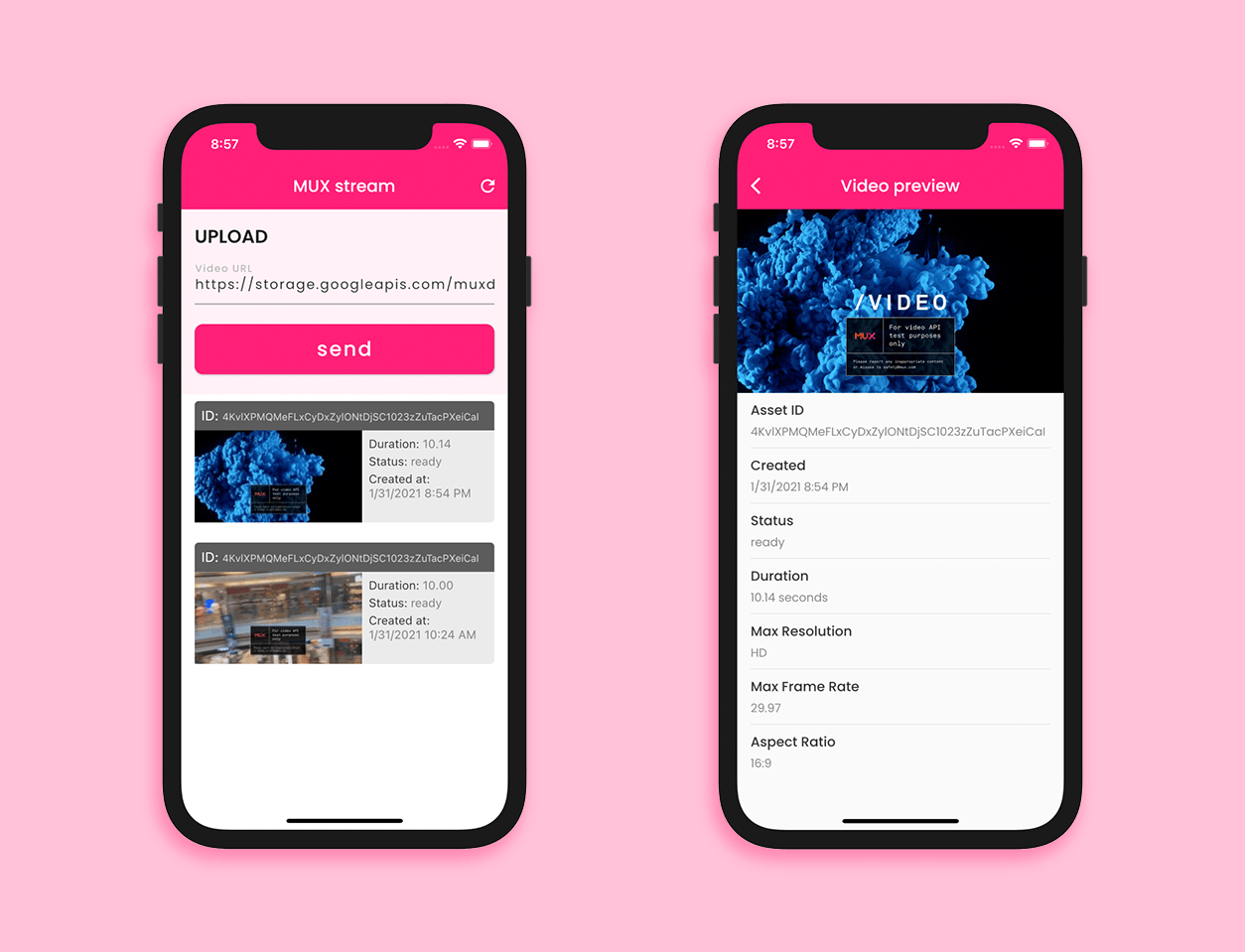

App overview

We will build a simple Flutter app containing just two pages:

- HomePage

- PreviewPage

Plugins

First of all, let’s add the plugins that we need for building this app to the pubspec.yaml file.

- dio – for making HTTP POST and GET requests

- video_player – for previewing the video to be streamed

- intl – for formatting DateTime objects

Building the backend

It’s always better to design the backend of an app first and then structure the UI based on the backend.

We need to build a simple API server to send the API requests to Mux. Although sending GET and POST requests directly from the client (mobile device) to the Mux server is convenient, it leaves a massive security hole that may expose your Mux credentials to anyone who uses the app.

You can get basic API server code, written in Node.js and containing the functions that we require for our implementation, here.

Now, inside the root folder you have to create a file called .env with the following content:

MUX_TOKEN_ID="<mux_token_id>"

MUX_TOKEN_SECRET="<mux_token_secret>"

Store your Token ID and Token Secret in this file and add it to .gitignore. The API server will automatically pick up the credentials from here.
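For context, here is a rough sketch of what the API server has to do with these two values before it can talk to Mux. Mux authenticates API requests with HTTP Basic auth, using the Token ID as the username and the Token Secret as the password, and an asset is created by posting the video URL as `input`. The sample server is written in Node.js; the sketch uses Dart only to stay consistent with the rest of the article. The helper names `basicAuthHeader` and `createAssetBody` are made up for illustration, and the exact body shape is an assumption based on the fields used in this article's tests. This logic belongs on the server, not in the Flutter client.

```dart
import 'dart:convert';

/// Builds the value of the HTTP Basic `Authorization` header
/// from a token ID and token secret (placeholder values in main).
String basicAuthHeader(String tokenId, String tokenSecret) {
  final credentials = utf8.encode('$tokenId:$tokenSecret');
  return 'Basic ${base64Encode(credentials)}';
}

/// Builds a JSON body for creating an asset from a remote video URL.
/// The field names follow the ones used in this article's tests (assumption).
String createAssetBody(String videoUrl) {
  return jsonEncode({
    'input': videoUrl,
    'playback_policy': ['public'],
  });
}

void main() {
  // Placeholder credentials; real ones are read from .env on the server.
  print(basicAuthHeader('my-token-id', 'my-token-secret'));
  print(createAssetBody('https://example.com/video.mp4'));
}
```

Keeping this step on the server means the Flutter app only ever sees the server's own endpoints, never the token pair.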

For testing, you can just run it on your local machine using:

node main.js

This will run the server on port 3000, and you can access it from the client app using the URL:

http://localhost:3000
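One practical caveat when testing on an Android emulator: `localhost` there refers to the emulator itself, and the host machine is reachable at the special address `10.0.2.2`. A minimal sketch of picking the base URL accordingly could look like this. `apiBaseUrl` is a hypothetical helper that is not part of the sample app, and in a real app the flag could come from `Platform.isAndroid` or a build-time setting.

```dart
/// Returns the base URL of the local API server.
/// `onAndroidEmulator` is supplied by the caller (hypothetical flag).
String apiBaseUrl(bool onAndroidEmulator, {int port = 3000}) {
  // 10.0.2.2 is the Android emulator's alias for the host machine.
  final host = onAndroidEmulator ? '10.0.2.2' : 'localhost';
  return 'http://$host:$port';
}

void main() {
  print(apiBaseUrl(false)); // desktop, iOS simulator
  print(apiBaseUrl(true)); // Android emulator
}
```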

The backend of this app will deal with the API calls (i.e., GET and POST requests), so this will mainly consist of two parts:

- Mux client

- Model classes

Mux client

We will start building the client class by initializing Dio. Create a MUXClient class inside a new file called mux_client.dart.

Now, create a Dio object:

import 'package:dio/dio.dart';

class MUXClient {

Dio _dio = Dio();

}

Add a method initializeDio():

class MUXClient {

Dio _dio = Dio();

initializeDio() {

// initialize the dio here

}

}

Here, we will configure Dio. We don’t need to perform any kind of authorization from the client, as that is already handled by our API server.

Create a string.dart file containing some constants that we require afterwards:

// API for sending videos

const muxBaseUrl = 'https://api.mux.com';

// API server running on localhost

const muxServerUrl = 'http://localhost:3000';

// API for generating thumbnails of a video

const muxImageBaseUrl = 'https://image.mux.com';

// API for streaming a video

const muxStreamBaseUrl = 'https://stream.mux.com';

// Received video file format

const videoExtension = 'm3u8';

// Thumbnail file type and size

const imageTypeSize = 'thumbnail.jpg?time=5&width=200';

// Content Type used in API calls

const contentType = 'application/json';

// Test video url provided by MUX

const demoVideoUrl = 'https://storage.googleapis.com/muxdemofiles/mux-video-intro.mp4';

// Playback policy referenced by the tests later on (assumed value; Mux accepts 'public' or 'signed')

const playbackPolicy = 'public';

The initializeDio() method will look like this:

initializeDio() {

BaseOptions options = BaseOptions(

baseUrl: muxServerUrl,

connectTimeout: 8000,

receiveTimeout: 5000,

headers: {

"Content-Type": contentType, // application/json

},

);

_dio = Dio(options);

}

Next, we need a method for storing the video to Mux by sending a POST request to the endpoint /assets. For this, create a method called storeVideo(), and pass the video URL to it as a parameter. This method will return the VideoData after processing on Mux is complete.

Future<VideoData> storeVideo({String videoUrl}) async {

Response response;

try {

response = await _dio.post(

"/assets",

data: {

"videoUrl": videoUrl,

},

);

} catch (e) {

print('Error storing video: $e');

throw Exception('Failed to store video on MUX');

}

if (response.statusCode == 200) {

VideoData videoData = VideoData.fromJson(response.data);

String status = videoData.data.status;

while (status == 'preparing') {

print('processing...');

await Future.delayed(Duration(seconds: 1));

videoData = await checkPostStatus(videoId: videoData.data.id);

status = videoData.data.status;

}

return videoData;

}

return null;

}

Here, I have used the checkPostStatus() method to track the status of the video that is being processed until it is ready. A GET request is sent to the endpoint /asset with the video ID as the query parameter to get a VideoData object containing the status information.

Future<VideoData> checkPostStatus({String videoId}) async {

try {

Response response = await _dio.get(

"/asset",

queryParameters: {

'videoId': videoId,

},

);

if (response.statusCode == 200) {

VideoData videoData = VideoData.fromJson(response.data);

return videoData;

}

} catch (e) {

print('Error checking status: $e');

throw Exception('Failed to check status');

}

return null;

}
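
The loop in storeVideo() keeps polling for as long as the status is 'preparing'. As a sketch of a more defensive variant, the helper below caps the number of attempts and hands any other status (such as 'ready' or 'errored') back to the caller. `pollStatus` and its parameters are hypothetical and not part of the sample app; the status is fetched through a callback so that the helper can be tried without a network connection.

```dart
import 'dart:async';

/// Calls `fetchStatus` until it reports something other than 'preparing',
/// waiting `interval` between attempts and giving up after `maxAttempts`.
Future<String> pollStatus(
  Future<String> Function() fetchStatus, {
  Duration interval = const Duration(seconds: 1),
  int maxAttempts = 30,
}) async {
  for (var attempt = 0; attempt < maxAttempts; attempt++) {
    final status = await fetchStatus();
    if (status != 'preparing') return status;
    await Future.delayed(interval);
  }
  throw TimeoutException('Asset still preparing after $maxAttempts attempts');
}

Future<void> main() async {
  // Fake status source standing in for checkPostStatus().
  final responses = ['preparing', 'preparing', 'ready'];
  var index = 0;
  final status = await pollStatus(
    () async => responses[index++],
    interval: Duration(milliseconds: 10),
  );
  print(status);
}
```

In the app, the callback would wrap checkPostStatus() and return `videoData.data.status`.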

We will define one more method, getAssetList(), for retrieving a list of all the videos that are stored on Mux by sending a GET request to the endpoint /assets. It will return an AssetData object.

Future<AssetData> getAssetList() async {

try {

Response response = await _dio.get(

"/assets",

);

if (response.statusCode == 200) {

AssetData assetData = AssetData.fromJson(response.data);

return assetData;

}

} catch (e) {

print('Error retrieving videos: $e');

throw Exception('Failed to retrieve videos from MUX');

}

return null;

}

You may be wondering what the VideoData and AssetData classes are – they are just model classes for easily parsing the JSON data returned by the Mux API calls.

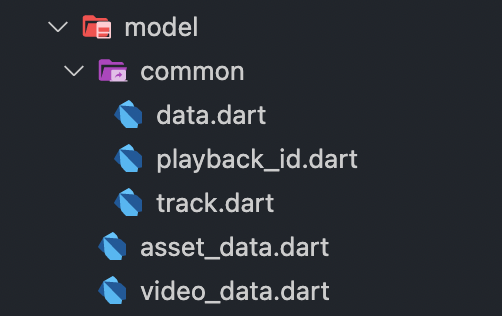

Model class

There are two main model classes that we need:

- VideoData: parses the data returned for each video file

- AssetData: parses the data returned for the list of assets (or videos)

You can define the model classes in this structure:

The VideoData class:

import 'dart:convert';

class VideoData {

VideoData({

this.data,

});

Data data;

factory VideoData.fromRawJson(String str) =>

VideoData.fromJson(json.decode(str));

String toRawJson() => json.encode(toJson());

factory VideoData.fromJson(Map<String, dynamic> json) => VideoData(

data: json["data"] == null ? null : Data.fromJson(json["data"]),

);

Map<String, dynamic> toJson() => {

"data": data == null ? null : data.toJson(),

};

}

The AssetData class:

class AssetData {

AssetData({

this.data,

});

List<Data> data;

factory AssetData.fromRawJson(String str) =>

AssetData.fromJson(json.decode(str));

String toRawJson() => json.encode(toJson());

factory AssetData.fromJson(Map<String, dynamic> json) => AssetData(

data: json["data"] == null

? null

: List<Data>.from(json["data"].map((x) => Data.fromJson(x))),

);

Map<String, dynamic> toJson() => {

"data": data == null

? null

: List<dynamic>.from(data.map((x) => x.toJson())),

};

}

Both of them rely on three shared model classes (Data, PlaybackId, and Track).

The Data class looks like this:

class Data {

Data({

this.test,

this.maxStoredFrameRate,

this.status,

this.tracks,

this.id,

this.maxStoredResolution,

this.masterAccess,

this.playbackIds,

this.createdAt,

this.duration,

this.mp4Support,

this.aspectRatio,

});

bool test;

double maxStoredFrameRate;

String status;

List<Track> tracks;

String id;

String maxStoredResolution;

String masterAccess;

List<PlaybackId> playbackIds;

String createdAt;

double duration;

String mp4Support;

String aspectRatio;

factory Data.fromRawJson(String str) => Data.fromJson(json.decode(str));

String toRawJson() => json.encode(toJson());

factory Data.fromJson(Map<String, dynamic> json) => Data(

test: json["test"] == null ? null : json["test"],

maxStoredFrameRate: json["max_stored_frame_rate"] == null

? null

: json["max_stored_frame_rate"].toDouble(),

status: json["status"] == null ? null : json["status"],

tracks: json["tracks"] == null

? null

: List<Track>.from(json["tracks"].map((x) => Track.fromJson(x))),

id: json["id"] == null ? null : json["id"],

maxStoredResolution: json["max_stored_resolution"] == null

? null

: json["max_stored_resolution"],

masterAccess:

json["master_access"] == null ? null : json["master_access"],

playbackIds: json["playback_ids"] == null

? null

: List<PlaybackId>.from(

json["playback_ids"].map((x) => PlaybackId.fromJson(x))),

createdAt: json["created_at"] == null ? null : json["created_at"],

duration: json["duration"] == null ? null : json["duration"].toDouble(),

mp4Support: json["mp4_support"] == null ? null : json["mp4_support"],

aspectRatio: json["aspect_ratio"] == null ? null : json["aspect_ratio"],

);

Map<String, dynamic> toJson() => {

"test": test == null ? null : test,

"max_stored_frame_rate":

maxStoredFrameRate == null ? null : maxStoredFrameRate,

"status": status == null ? null : status,

"tracks": tracks == null

? null

: List<dynamic>.from(tracks.map((x) => x.toJson())),

"id": id == null ? null : id,

"max_stored_resolution":

maxStoredResolution == null ? null : maxStoredResolution,

"master_access": masterAccess == null ? null : masterAccess,

"playback_ids": playbackIds == null

? null

: List<dynamic>.from(playbackIds.map((x) => x.toJson())),

"created_at": createdAt == null ? null : createdAt,

"duration": duration == null ? null : duration,

"mp4_support": mp4Support == null ? null : mp4Support,

"aspect_ratio": aspectRatio == null ? null : aspectRatio,

};

}

PlaybackId is given below:

class PlaybackId {

PlaybackId({

this.policy,

this.id,

});

String policy;

String id;

factory PlaybackId.fromRawJson(String str) =>

PlaybackId.fromJson(json.decode(str));

String toRawJson() => json.encode(toJson());

factory PlaybackId.fromJson(Map<String, dynamic> json) => PlaybackId(

policy: json["policy"] == null ? null : json["policy"],

id: json["id"] == null ? null : json["id"],

);

Map<String, dynamic> toJson() => {

"policy": policy == null ? null : policy,

"id": id == null ? null : id,

};

}

The Track class is as follows:

class Track {

Track({

this.maxWidth,

this.type,

this.id,

this.duration,

this.maxFrameRate,

this.maxHeight,

this.maxChannelLayout,

this.maxChannels,

});

int maxWidth;

String type;

String id;

double duration;

double maxFrameRate;

int maxHeight;

String maxChannelLayout;

int maxChannels;

factory Track.fromRawJson(String str) => Track.fromJson(json.decode(str));

String toRawJson() => json.encode(toJson());

factory Track.fromJson(Map<String, dynamic> json) => Track(

maxWidth: json["max_width"] == null ? null : json["max_width"],

type: json["type"] == null ? null : json["type"],

id: json["id"] == null ? null : json["id"],

duration: json["duration"] == null ? null : json["duration"].toDouble(),

maxFrameRate: json["max_frame_rate"] == null

? null

: json["max_frame_rate"].toDouble(),

maxHeight: json["max_height"] == null ? null : json["max_height"],

maxChannelLayout: json["max_channel_layout"] == null

? null

: json["max_channel_layout"],

maxChannels: json["max_channels"] == null ? null : json["max_channels"],

);

Map<String, dynamic> toJson() => {

"max_width": maxWidth == null ? null : maxWidth,

"type": type == null ? null : type,

"id": id == null ? null : id,

"duration": duration == null ? null : duration,

"max_frame_rate": maxFrameRate == null ? null : maxFrameRate,

"max_height": maxHeight == null ? null : maxHeight,

"max_channel_layout":

maxChannelLayout == null ? null : maxChannelLayout,

"max_channels": maxChannels == null ? null : maxChannels,

};

}
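
The .toDouble() calls in these classes are there for a reason: Dart's JSON decoder returns an int when a number has no fractional part, so a value such as 23 cannot be assigned to a double field directly. The sketch below illustrates this with a trimmed-down, hypothetical `MiniAsset` class and an invented sample payload. It assumes that all three fields are present in the response.

```dart
import 'dart:convert';

/// Trimmed-down stand-in for the Data class (hypothetical, for illustration).
class MiniAsset {
  MiniAsset(this.id, this.status, this.duration);

  final String id;
  final String status;
  final double duration;

  factory MiniAsset.fromJson(Map<String, dynamic> json) => MiniAsset(
        json['id'] as String,
        json['status'] as String,
        // The decoded value may be an int or a double; num covers both.
        (json['duration'] as num).toDouble(),
      );
}

void main() {
  // Invented payload: note that duration has no fractional part.
  const body = '{"data": {"id": "abc", "status": "ready", "duration": 23}}';
  final decoded = jsonDecode(body) as Map<String, dynamic>;
  final asset = MiniAsset.fromJson(decoded['data'] as Map<String, dynamic>);
  print('${asset.id} ${asset.status} ${asset.duration}');
}
```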

HomePage

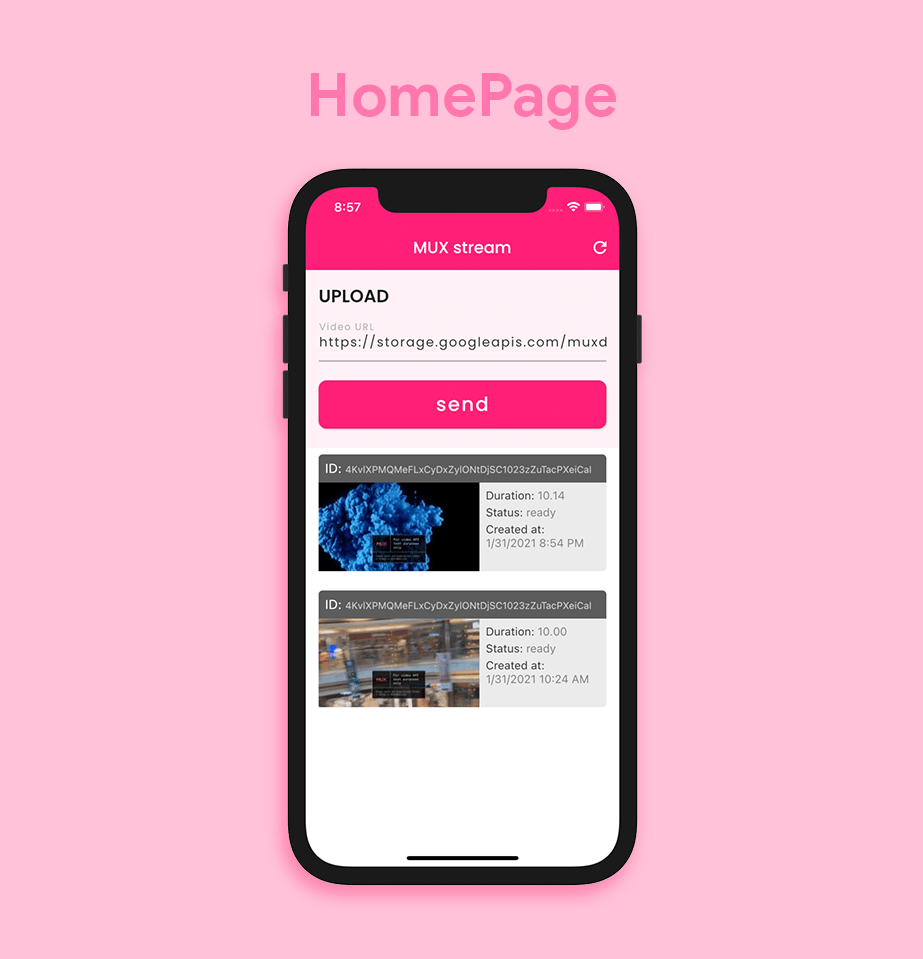

The HomePage will contain a TextField for taking the video URL as input and a button for uploading it to Mux. It also displays the list of all video assets present on Mux.

The HomePage will be a StatefulWidget. First, we will call the initializeDio() method and initialize a TextEditingController and a FocusNode inside the initState() method.

class HomePage extends StatefulWidget {

@override

_HomePageState createState() => _HomePageState();

}

class _HomePageState extends State<HomePage> {

MUXClient _muxClient = MUXClient();

TextEditingController _textControllerVideoURL;

FocusNode _textFocusNodeVideoURL;

@override

void initState() {

super.initState();

_muxClient.initializeDio();

_textControllerVideoURL = TextEditingController(text:demoVideoUrl);

_textFocusNodeVideoURL = FocusNode();

}

@override

void dispose() {

// Release the controller and focus node when the page is removed

_textControllerVideoURL.dispose();

_textFocusNodeVideoURL.dispose();

super.dispose();

}

@override

Widget build(BuildContext context) {

return Container();

}

}

Let’s add a TextField and a RaisedButton for storing the video on Mux. (RaisedButton has since been deprecated in favor of ElevatedButton in newer Flutter releases.) The button will be replaced by a Text widget and a CircularProgressIndicator while the storage is in progress.

class _HomePageState extends State<HomePage> {

bool isProcessing = false;

// ...

@override

Widget build(BuildContext context) {

return GestureDetector(

onTap: () {

_textFocusNodeVideoURL.unfocus();

},

child: Scaffold(

backgroundColor: Colors.white,

appBar: AppBar(

elevation: 0,

brightness: Brightness.dark,

title: Text('Mux stream'),

backgroundColor: CustomColors.muxPink,

actions: [

IconButton(

icon: Icon(Icons.refresh),

onPressed: () {

setState(() {});

},

),

],

),

body: Column(

mainAxisAlignment: MainAxisAlignment.start,

children: [

Container(

color: CustomColors.muxPink.withOpacity(0.06),

child: Padding(

padding: const EdgeInsets.only(

top: 16.0,

left: 16.0,

right: 16.0,

bottom: 24.0,

),

child: Column(

crossAxisAlignment: CrossAxisAlignment.start,

children: [

Text(

'UPLOAD',

style: TextStyle(

fontWeight: FontWeight.w600,

fontSize: 22.0,

),

),

TextField(

focusNode: _textFocusNodeVideoURL,

keyboardType: TextInputType.url,

textInputAction: TextInputAction.done,

style: TextStyle(

color: CustomColors.muxGray,

fontSize: 16.0,

letterSpacing: 1.5,

),

controller: _textControllerVideoURL,

cursorColor: CustomColors.muxPinkLight,

autofocus: false,

onSubmitted: (value) {

_textFocusNodeVideoURL.unfocus();

},

decoration: InputDecoration(

focusedBorder: UnderlineInputBorder(

borderSide: BorderSide(

color: CustomColors.muxPink,

width: 2,

),

),

enabledBorder: UnderlineInputBorder(

borderSide: BorderSide(

color: Colors.black26,

width: 2,

),

),

labelText: 'Video URL',

labelStyle: TextStyle(

color: Colors.black26,

fontWeight: FontWeight.w500,

fontSize: 16,

),

hintText: 'Enter the URL of the video to upload',

hintStyle: TextStyle(

color: Colors.black12,

fontSize: 12.0,

letterSpacing: 2,

),

),

),

isProcessing

? Padding(

padding: const EdgeInsets.only(top: 24.0),

child: Row(

mainAxisAlignment: MainAxisAlignment.spaceBetween,

children: [

Text(

'Processing . . .',

style: TextStyle(

color: CustomColors.muxPink,

fontSize: 16.0,

fontWeight: FontWeight.w500,

letterSpacing: 1.5,

),

),

CircularProgressIndicator(

valueColor: AlwaysStoppedAnimation<Color>(

CustomColors.muxPink,

),

strokeWidth: 2,

)

],

),

)

: Padding(

padding: const EdgeInsets.only(top: 24.0),

child: Container(

width: double.maxFinite,

child: RaisedButton(

color: CustomColors.muxPink,

onPressed: () async {

setState(() {

isProcessing = true;

});

try {

await _muxClient.storeVideo(

videoUrl: _textControllerVideoURL.text);

} finally {

// Restore the button even if the upload throws

setState(() {

isProcessing = false;

});

}

},

shape: RoundedRectangleBorder(

borderRadius: BorderRadius.circular(10),

),

child: Padding(

padding: EdgeInsets.only(

top: 12.0,

bottom: 12.0,

),

child: Text(

'send',

style: TextStyle(

fontSize: 24,

fontWeight: FontWeight.w500,

color: Colors.white,

letterSpacing: 2,

),

),

),

),

),

),

],

),

),

),

// ...

],

),

),

);

}

}

For displaying the list of all videos, add a FutureBuilder that wraps a ListView to the Column:

Expanded(

child: FutureBuilder<AssetData>(

future: _muxClient.getAssetList(),

builder: (context, snapshot) {

if (snapshot.hasData) {

AssetData assetData = snapshot.data;

int length = assetData.data.length;

return ListView.separated(

physics: BouncingScrollPhysics(),

itemCount: length,

itemBuilder: (context, index) {

DateTime dateTime = DateTime.fromMillisecondsSinceEpoch(

int.parse(assetData.data[index].createdAt) * 1000);

DateFormat formatter = DateFormat.yMd().add_jm();

String dateTimeString = formatter.format(dateTime);

String currentStatus = assetData.data[index].status;

bool isReady = currentStatus == 'ready';

String playbackId = isReady

? assetData.data[index].playbackIds[0].id

: null;

String thumbnailURL = isReady

? '$muxImageBaseUrl/$playbackId/$imageTypeSize'

: null;

return VideoTile(

assetData: assetData.data[index],

thumbnailUrl: thumbnailURL,

isReady: isReady,

dateTimeString: dateTimeString,

);

},

separatorBuilder: (_, __) => SizedBox(

height: 16.0,

),

);

}

return Container(

child: Text(

'No videos present',

style: TextStyle(

color: Colors.black45,

),

),

);

},

),

)
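
The two conversions performed inside itemBuilder can be pulled into small helpers, which makes them easy to check in isolation. The following is a minimal sketch; `thumbnailUrl` and `createdAtToDateTime` are hypothetical names, and it assumes, as the listing above does, that created_at arrives as a string of seconds since the Unix epoch.

```dart
const muxImageBaseUrl = 'https://image.mux.com';

/// Builds the thumbnail address for a playback ID.
/// `time` is the position in the video (seconds), `width` is in pixels.
String thumbnailUrl(String playbackId, {int time = 5, int width = 200}) =>
    '$muxImageBaseUrl/$playbackId/thumbnail.jpg?time=$time&width=$width';

/// Converts a created_at string (seconds since epoch) to a UTC DateTime.
DateTime createdAtToDateTime(String createdAt) =>
    DateTime.fromMillisecondsSinceEpoch(
      int.parse(createdAt) * 1000,
      isUtc: true,
    );

void main() {
  print(thumbnailUrl('abc123'));
  print(createdAtToDateTime('1612112341'));
}
```

The sketch returns a UTC value so that the result does not depend on the machine's time zone; call toLocal() on it before formatting for display.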

Here, we have parsed and formatted the DateTime object to display as a proper String. The thumbnail URL is created by using the playback ID and specifying the image type and size. The VideoTile widget is used for generating the UI for each list item. The class looks like this:

class VideoTile extends StatelessWidget {

final Data assetData;

final String thumbnailUrl;

final String dateTimeString;

final bool isReady;

VideoTile({

@required this.assetData,

@required this.thumbnailUrl,

@required this.dateTimeString,

@required this.isReady,

});

@override

Widget build(BuildContext context) {

return Padding(

padding: const EdgeInsets.only(

left: 16.0,

right: 16.0,

top: 8.0,

),

child: InkWell(

onTap: () {

Navigator.of(context).push(

MaterialPageRoute(

builder: (context) => PreviewPage(

assetData: assetData,

),

),

);

},

child: Container(

decoration: BoxDecoration(

color: CustomColors.muxGray.withOpacity(0.1),

borderRadius: BorderRadius.all(

Radius.circular(5.0),

),

),

child: Column(

crossAxisAlignment: CrossAxisAlignment.start,

children: [

Container(

width: double.maxFinite,

decoration: BoxDecoration(

color: CustomColors.muxGray.withOpacity(0.8),

borderRadius: BorderRadius.only(

topLeft: Radius.circular(5.0),

topRight: Radius.circular(5.0),

),

),

child: Padding(

padding: const EdgeInsets.only(

left: 8.0,

top: 8.0,

bottom: 8.0,

),

child: RichText(

maxLines: 1,

softWrap: false,

overflow: TextOverflow.fade,

text: TextSpan(

text: 'ID: ',

style: TextStyle(

color: Colors.white,

fontSize: 16.0,

),

children: [

TextSpan(

text: assetData.id,

style: TextStyle(

fontSize: 12.0,

color: Colors.white70,

),

)

],

),

),

),

),

Row(

crossAxisAlignment: CrossAxisAlignment.start,

children: [

isReady

? Image.network(

thumbnailUrl,

cacheWidth: 200,

cacheHeight: 110,

)

: Flexible(

child: AspectRatio(

aspectRatio: 16 / 9,

child: Container(

width: 200,

// height: 110,

color: Colors.black87,

),

),

),

Flexible(

child: Padding(

padding: const EdgeInsets.only(

left: 8.0,

top: 8.0,

),

child: Column(

mainAxisSize: MainAxisSize.min,

crossAxisAlignment: CrossAxisAlignment.start,

children: [

RichText(

maxLines: 1,

softWrap: false,

overflow: TextOverflow.fade,

text: TextSpan(

text: 'Duration: ',

style: TextStyle(

color: CustomColors.muxGray,

fontSize: 14.0,

),

children: [

TextSpan(

text: assetData.duration == null

? 'N/A'

: assetData.duration.toStringAsFixed(2),

style: TextStyle(

// fontSize: 12.0,

color:

CustomColors.muxGray.withOpacity(0.6),

),

)

],

),

),

SizedBox(height: 4.0),

RichText(

maxLines: 1,

softWrap: false,

overflow: TextOverflow.fade,

text: TextSpan(

text: 'Status: ',

style: TextStyle(

color: CustomColors.muxGray,

fontSize: 14.0,

),

children: [

TextSpan(

text: assetData.status,

style: TextStyle(

// fontSize: 12.0,

color:

CustomColors.muxGray.withOpacity(0.6),

),

)

],

),

),

SizedBox(height: 4.0),

RichText(

maxLines: 2,

overflow: TextOverflow.fade,

text: TextSpan(

text: 'Created at: ',

style: TextStyle(

color: CustomColors.muxGray,

fontSize: 14.0,

),

children: [

TextSpan(

text: '\n$dateTimeString',

style: TextStyle(

// fontSize: 12.0,

color:

CustomColors.muxGray.withOpacity(0.6),

),

)

],

),

),

],

),

),

),

],

),

],

),

),

),

);

}

}

Each of the tiles is wrapped in an InkWell widget that has an onTap method for navigating to the PreviewPage, passing the asset data present at that index.

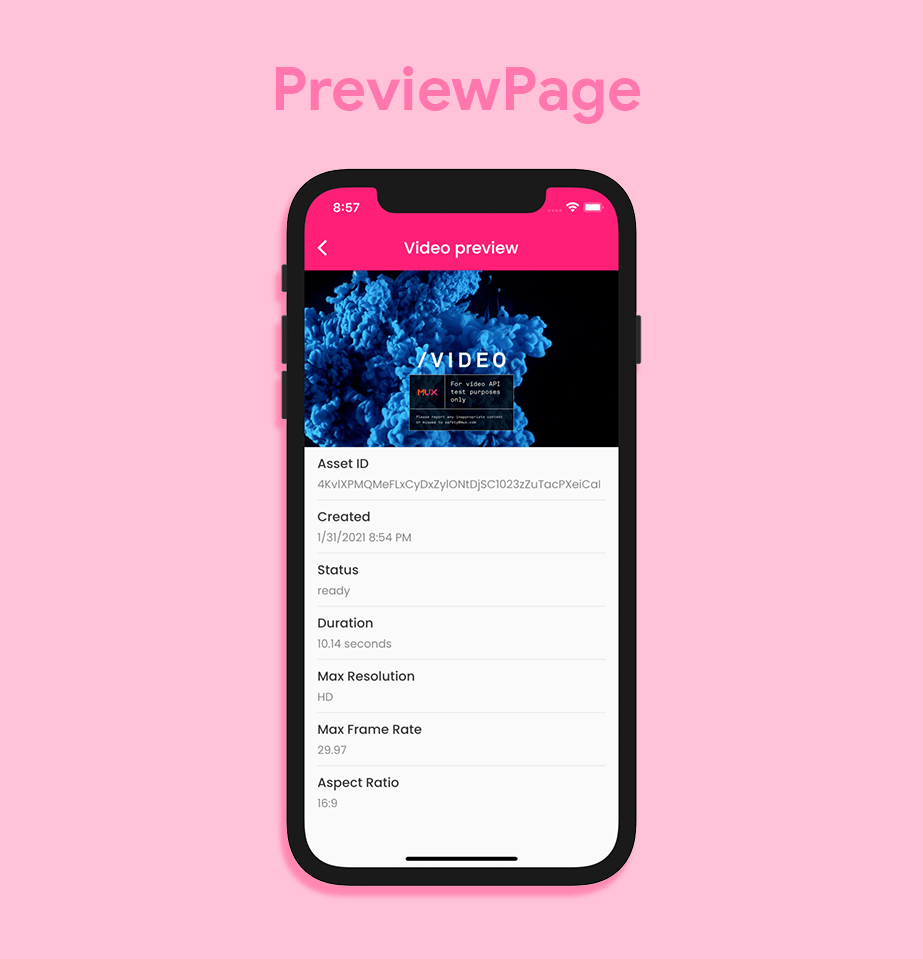

PreviewPage

This page will be used for viewing the video using the stream URL and for showing some information related to the video file.

There will be a video player at the top of the page, followed by a list of Text widgets for displaying all of the information.

We have to create a VideoPlayerController and initialize it inside the initState() of this class.

class PreviewPage extends StatefulWidget {

final Data assetData;

const PreviewPage({@required this.assetData});

@override

_PreviewPageState createState() => _PreviewPageState();

}

class _PreviewPageState extends State<PreviewPage> {

VideoPlayerController _controller;

Data assetData;

String dateTimeString;

@override

void initState() {

super.initState();

assetData = widget.assetData;

String playbackId = assetData.playbackIds[0].id;

DateTime dateTime = DateTime.fromMillisecondsSinceEpoch(

int.parse(assetData.createdAt) * 1000);

DateFormat formatter = DateFormat.yMd().add_jm();

dateTimeString = formatter.format(dateTime);

_controller = VideoPlayerController.network(

'$muxStreamBaseUrl/$playbackId.$videoExtension')

..initialize().then((_) {

setState(() {});

});

_controller.play();

}

@override

void dispose() {

// Release the native player resources when leaving the page

_controller.dispose();

super.dispose();

}

@override

Widget build(BuildContext context) {

return Scaffold(

appBar: AppBar(

elevation: 0,

brightness: Brightness.dark,

title: Text('Video preview'),

backgroundColor: CustomColors.muxPink,

),

body: SingleChildScrollView(

physics: BouncingScrollPhysics(),

child: Column(

mainAxisAlignment: MainAxisAlignment.start,

crossAxisAlignment: CrossAxisAlignment.start,

children: [

// Add the widgets here

],

),

),

);

}

}

I have used the Mux stream URL for loading and initializing the video controller. Also, _controller.play() is used to start playing the video as soon as it is initialized.
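As a small sketch of how the stream URL is put together, the helper below builds the same HLS address from a playback ID using Dart's Uri class. `hlsStreamUri` is a hypothetical name, and rejecting an empty ID is an added safeguard that the sample app does not have.

```dart
/// Builds the HLS playlist address for a playback ID.
Uri hlsStreamUri(String playbackId) {
  if (playbackId.isEmpty) {
    throw ArgumentError.value(playbackId, 'playbackId', 'must not be empty');
  }
  return Uri.https('stream.mux.com', '/$playbackId.m3u8');
}

void main() {
  print(hlsStreamUri('abc123'));
}
```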

The video player is created inside the Column using the following:

Stack(

children: [

AspectRatio(

aspectRatio: 16 / 9,

child: Container(

color: Colors.black,

width: double.maxFinite,

child: Center(

child: CircularProgressIndicator(

valueColor: AlwaysStoppedAnimation<Color>(

CustomColors.muxPink,

),

strokeWidth: 2,

),

),

),

),

_controller.value.initialized

? AspectRatio(

aspectRatio: _controller.value.aspectRatio,

child: VideoPlayer(_controller),

)

: AspectRatio(

aspectRatio: 16 / 9,

child: Container(

color: Colors.black,

width: double.maxFinite,

child: Center(

child: CircularProgressIndicator(

valueColor: AlwaysStoppedAnimation<Color>(

CustomColors.muxPink,

),

strokeWidth: 2,

),

),

),

),

],

),

For displaying the information, we can add this to the Column after the video player widget:

Padding(

padding: const EdgeInsets.only(top: 8.0, left: 16.0, right: 16.0),

child: Column(

mainAxisSize: MainAxisSize.max,

mainAxisAlignment: MainAxisAlignment.start,

crossAxisAlignment: CrossAxisAlignment.start,

children: [

InfoTile(

name: 'Asset ID',

data: assetData.id,

),

InfoTile(

name: 'Created',

data: dateTimeString,

),

InfoTile(

name: 'Status',

data: assetData.status,

),

InfoTile(

name: 'Duration',

data: '${assetData.duration.toStringAsFixed(2)} seconds',

),

InfoTile(

name: 'Max Resolution',

data: assetData.maxStoredResolution,

),

InfoTile(

name: 'Max Frame Rate',

data: assetData.maxStoredFrameRate.toString(),

),

InfoTile(

name: 'Aspect Ratio',

data: assetData.aspectRatio,

),

SizedBox(height: 8.0),

],

),

)

The InfoTile widget is used for creating the UI of each item in the Column:

class InfoTile extends StatelessWidget {

final String name;

final String data;

InfoTile({

@required this.name,

@required this.data,

});

@override

Widget build(BuildContext context) {

return Column(

crossAxisAlignment: CrossAxisAlignment.start,

children: [

Text(

name,

style: TextStyle(

color: CustomColors.muxGray,

fontSize: 16.0,

fontWeight: FontWeight.w500,

),

),

SizedBox(height: 4.0),

Text(

data,

style: TextStyle(

color: CustomColors.muxGray.withOpacity(0.6),

fontSize: 14.0,

fontWeight: FontWeight.w400,

),

),

SizedBox(height: 8.0),

],

);

}

}

Congratulations, you have created a Flutter app for streaming videos.

Testing of the video streaming app built with Flutter and Mux

After you have built a video streaming app with Flutter and Mux, it’s time to test it.

Verifying whether you are getting a correct response from an API call while using a third-party service is very important. We will create some tests to mock the API responses and verify whether they are being correctly parsed.

You can use the http_mock_adapter package, which builds on dio and mockito. It is very effective when used alongside the dio package.

We will not go deep into the tests in this article. First of all, create a new file inside the test folder called mux_api_test.dart.

Let’s define three tests here:

main() {

Dio dio;

group('MUX API mock tests', () {

DioAdapter dioAdapter;

MUXClient muxClient;

setUpAll(() {

dio = Dio();

dioAdapter = DioAdapter();

dio.httpClientAdapter = dioAdapter;

muxClient = MUXClient();

muxClient.initializeDio();

});

test('GET videos', () async {

const path = '$muxServerUrl/assets';

String testJsonResponse =

'''{"data" : [{"playback_ids" : [{"policy" : "public", "id" : "9pWt97lvZeW02LOhYpaGiKPN01XvOaK6K15QClHrVxUqs"}], "status" : "ready", "id" : "tOY008DPRz9jB5r01DpOIfKxlspNocMsDUmK3iodCMEZ00", "created_at" : "1612112341"}]}''';

dio.httpClientAdapter = dioAdapter;

dioAdapter.onGet(path).reply(200, testJsonResponse);

final onGetResponse = await dio.get(path);

expect(await muxClient.getAssetList(), isA<AssetData>());

expect(onGetResponse.data, testJsonResponse);

});

test('GET status', () async {

const String videoId = 'QsaseZLbz01n3hv5mJL1sB6TnB8MT3mL7CfiAhaY02MIk';

const path = '/asset';

String testJsonResponse =

'''{"data": {"status": "ready", "playback_ids": [{"policy": "public", "id": "tcSCm5mqYxI1Rok602o8yKJQb001zOMvFb4bW61lKSzqE"}], "id": "BIJ95sTJnI4RMwm57GTBWA00WUoZYkQdwjKPqnNAxwi00", "created_at": "1612129368"}}''';

dio.httpClientAdapter = dioAdapter;

dioAdapter.onGet(path).reply(200, testJsonResponse);

final onGetResponse = await dio.get(path);

expect(

await muxClient.checkPostStatus(videoId: videoId), isA<VideoData>());

expect(onGetResponse.data, testJsonResponse);

});

test('POST a video', () async {

const path = '$muxServerUrl/assets';

String testJsonResponse =

'''{"data": {"status": "preparing", "playback_ids": [{"policy": "public", "id": "tcSCm5mqYxI1Rok602o8yKJQb001zOMvFb4bW61lKSzqE"}], "id": "BIJ95sTJnI4RMwm57GTBWA00WUoZYkQdwjKPqnNAxwi00", "created_at": "1612129368"}}''';

dio.httpClientAdapter = dioAdapter;

dioAdapter.onPost(

path,

data: {

"input": demoVideoUrl,

"playback_policy": playbackPolicy,

},

).reply(200, testJsonResponse);

final onPostResponse = await dio.post(

path,

data: {

"input": demoVideoUrl,

"playback_policy": playbackPolicy,

},

);

expect(

await muxClient.storeVideo(videoUrl: demoVideoUrl), isA<VideoData>());

expect(onPostResponse.data, testJsonResponse);

});

});

}
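
One thing to keep in mind with the tests above: MUXClient creates its own Dio instance inside initializeDio(), so the DioAdapter attached to the test's dio object only affects calls made through that object. A common way around this is to pass the transport into the class so that a test can substitute a fake. The sketch below shows the idea in plain Dart without any packages; `JsonGet` and `AssetCounter` are hypothetical names, not part of the sample app.

```dart
/// Signature of a function that performs a GET and returns decoded JSON.
typedef JsonGet = Future<Map<String, dynamic>> Function(String path);

/// Small client that receives its transport from the outside,
/// so a test can hand in a fake instead of a real HTTP client.
class AssetCounter {
  AssetCounter(this._get);

  final JsonGet _get;

  /// Counts the assets whose status is 'ready'.
  Future<int> countReadyAssets() async {
    final body = await _get('/assets');
    final assets = body['data'] as List<dynamic>;
    return assets.where((asset) => asset['status'] == 'ready').length;
  }
}

Future<void> main() async {
  // Fake transport returning a canned response.
  Future<Map<String, dynamic>> fakeGet(String path) async => {
        'data': [
          {'id': 'a', 'status': 'ready'},
          {'id': 'b', 'status': 'preparing'},
        ],
      };

  final counter = AssetCounter(fakeGet);
  print(await counter.countReadyAssets());
}
```

Applied to the sample app, the same idea would mean giving MUXClient a constructor parameter for the Dio instance, so that the test and the client share the mocked adapter.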

You can run all the tests by using the command:

flutter test

Running on Codemagic

To maintain a productive development workflow, you should always run tests on a CI/CD service. You can set up and run tests on Codemagic in just a few minutes. To get started with Codemagic CI/CD, check out the blog post here.

Conclusion

This article covers the essentials of integrating Mux with Flutter and using it to stream videos. In a real app, you will also need a place to store the videos. Firebase Storage is a good option for this, and it works with Mux by simply passing the URL of the video file. Don’t forget to explore the Mux API, as it provides many more customizations that you can apply while streaming a video.

References

- GitHub repo of the sample app

- Mux Docs

- [Article] Simulating HTTP request/response workflow for effective testing in Dart/Flutter via http-mock-adapter

- Codemagic Testing Docs

Souvik Biswas is a passionate Mobile App Developer (Android and Flutter). He has worked on a number of mobile apps throughout his journey, and he loves open source contribution on GitHub. He is currently pursuing a B.Tech degree in Computer Science and Engineering from Indian Institute of Information Technology Kalyani. He also writes Flutter articles on Medium - Flutter Community.