Written by Souvik Biswas

Usually, any task related to machine learning is taken care of by a separate team that has ML expertise. But if you are an individual developer or have a small team and want to integrate some cool machine learning functionality into your mobile app, then ML Kit comes to the rescue. It provides easy-to-use APIs for various machine learning models that run on-device without any internet connection.

In this article, you will learn how to use ML Kit to recognize text in your Flutter app.

Here is a brief synopsis of the key topics we are going to cover:

- What is ML Kit?

- Accessing the device camera from the app

- Using ML Kit to recognize text

- Identifying email addresses from images

- Using the CustomPaint widget to mark the detected text

So, let’s get started.

What is ML Kit?

ML Kit is a package created by Google’s machine learning experts for mobile app developers. It uses on-device machine learning, which helps with fast and real-time processing. Also, it works totally offline, so you don’t have to worry about any privacy violations.

NOTE: The ML Kit plugin for Flutter (google_ml_kit) is under development and is only available for the Android platform.

There is a package called firebase_ml_vision that has similar functionality. It provides a cloud-based ML solution that connects to Firebase and supports both the Android and iOS platforms. Unfortunately, it has been discontinued since these APIs are no longer available in the latest Firebase SDK. However, it is still functional, so if you want to use it, check out the article here.

Create a new Flutter project

Use the following command to create a new Flutter project:

flutter create flutter_mlkit_vision

Now, open the project using your favorite IDE.

To open the project with VS Code, you can use the following command:

code flutter_mlkit_vision

Overview

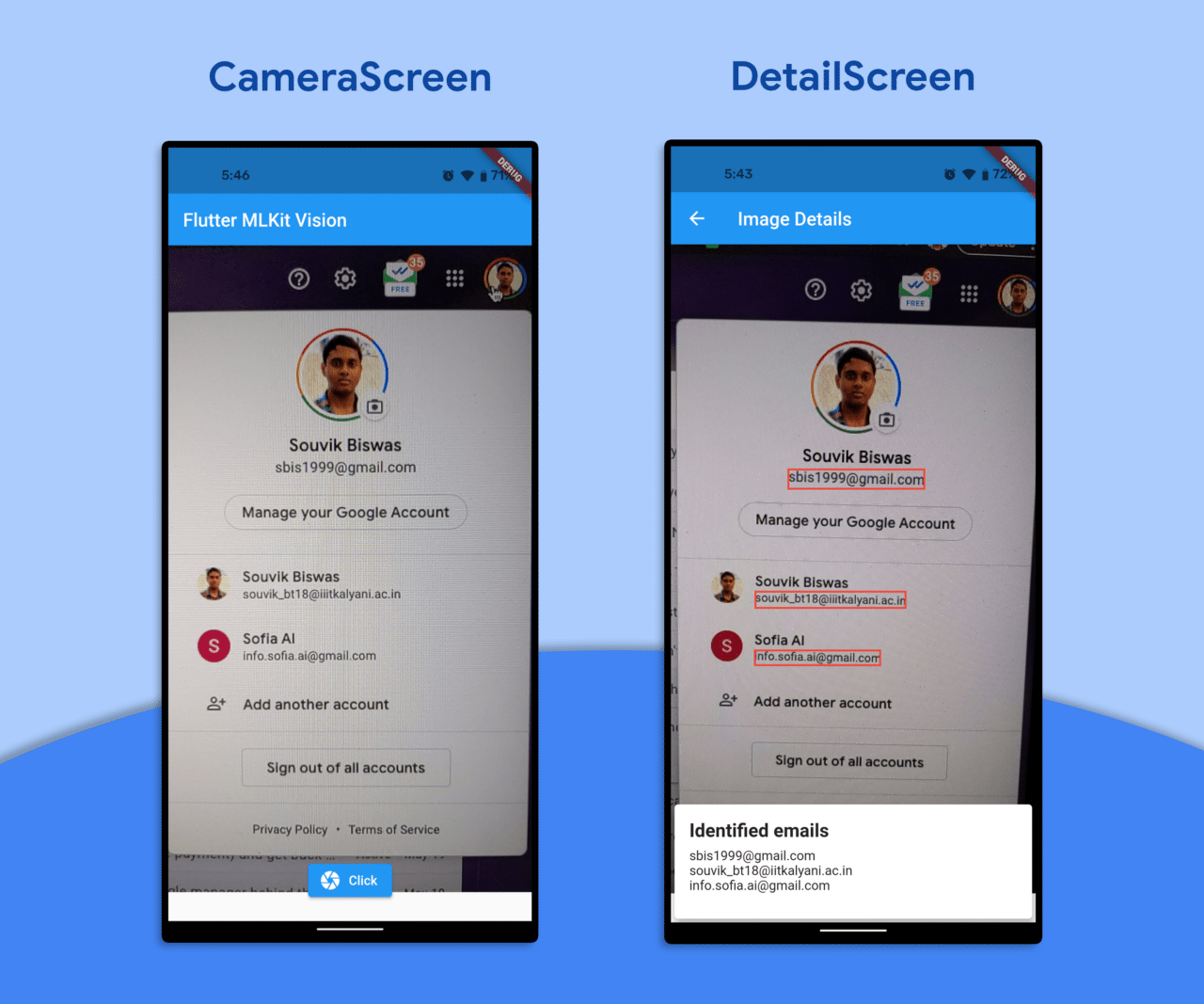

The Flutter app that we are going to build will mainly consist of two screens:

CameraScreen: This screen will only consist of the camera view and a button to take a picture.

DetailScreen: This screen will show the image details by identifying the text from the picture.

App screenshots

The final app will look like this:

Accessing device camera

To use the device’s camera in your Flutter app, you will need a plugin called camera.

Add the plugin to your pubspec.yaml file:

camera: ^0.8.1

For the latest version of the plugin, refer to pub.dev.

Remove the demo counter app code present in the main.dart file. Replace it with the following code:

import 'package:camera/camera.dart';
import 'package:flutter/material.dart';

import 'camera_screen.dart';

// Global variable for storing the list of cameras available
List<CameraDescription> cameras = [];

Future<void> main() async {
  // Fetch the available cameras before initializing the app
  try {
    WidgetsFlutterBinding.ensureInitialized();
    cameras = await availableCameras();
  } on CameraException catch (e) {
    debugPrint('CameraError: ${e.description}');
  }
  runApp(MyApp());
}

class MyApp extends StatelessWidget {
  @override
  Widget build(BuildContext context) {
    return MaterialApp(
      title: 'Flutter MLKit Vision',
      theme: ThemeData(
        primarySwatch: Colors.blue,
      ),
      home: CameraScreen(),
    );
  }
}

In the above code, I have used the availableCameras() method to retrieve the list of device cameras.

Now you have to define the CameraScreen, which will show the camera preview and a button for taking pictures.

class CameraScreen extends StatefulWidget {
  @override
  _CameraScreenState createState() => _CameraScreenState();
}

class _CameraScreenState extends State<CameraScreen> {
  @override
  Widget build(BuildContext context) {
    return Scaffold(
      appBar: AppBar(
        title: Text('Flutter MLKit Vision'),
      ),
      body: Container(),
    );
  }
}

Create a CameraController object:

// Inside _CameraScreenState class
late final CameraController _controller;

Create a method called _initializeCamera(), and initialize the camera _controller inside it:

// Initializes camera controller to preview on screen
void _initializeCamera() async {
  final CameraController cameraController = CameraController(
    cameras[0],
    ResolutionPreset.high,
  );
  _controller = cameraController;

  _controller.initialize().then((_) {
    if (!mounted) {
      return;
    }
    setState(() {});
  });
}

CameraController() has two required parameters:

CameraDescription: Here, you have to pass the device camera you want to access. On most devices, the list returned by availableCameras() is ordered as follows:

- 0 is usually the back camera

- 1 is usually the front camera

The order is not guaranteed on every device, so if you need a specific camera, check the lensDirection property of each CameraDescription instead of relying on the index.

ResolutionPreset: Here, you have to pass the resolution quality of the camera image.

Call the method inside initState():

@override
void initState() {
  super.initState();
  _initializeCamera();
}

In order to prevent any memory leaks, dispose of the _controller:

@override
void dispose() {
  _controller.dispose();
  super.dispose();
}

Now, let’s define a method called _takePicture() for taking a picture and saving it to the file system. The method will return the path of the saved image file.

// Takes picture with the selected device camera, and
// returns the image path
Future<String?> _takePicture() async {
  if (!_controller.value.isInitialized) {
    print("Controller is not initialized");
    return null;
  }

  String? imagePath;

  if (_controller.value.isTakingPicture) {
    print("Processing is in progress...");
    return null;
  }

  try {
    // Turning off the camera flash; awaited so that a failure
    // here is caught by the catch block below
    await _controller.setFlashMode(FlashMode.off);
    // Returns the image in cross-platform file abstraction
    final XFile file = await _controller.takePicture();
    // Retrieving the path
    imagePath = file.path;
  } on CameraException catch (e) {
    print("Camera Exception: $e");
    return null;
  }

  return imagePath;
}

It’s time to build the UI of the CameraScreen. The UI will consist of a Stack with the camera preview. On top of it, there will be a button for capturing pictures. Upon successful capture of the picture, it will navigate to another screen called DetailScreen.

@override
Widget build(BuildContext context) {
  return Scaffold(
    appBar: AppBar(
      title: Text('Flutter MLKit Vision'),
    ),
    body: _controller.value.isInitialized
        ? Stack(
            children: <Widget>[
              CameraPreview(_controller),
              Padding(
                padding: const EdgeInsets.all(20.0),
                child: Container(
                  alignment: Alignment.bottomCenter,
                  child: ElevatedButton.icon(
                    icon: Icon(Icons.camera),
                    label: Text("Click"),
                    onPressed: () async {
                      // If the returned path is not null, navigate
                      // to the DetailScreen
                      await _takePicture().then((String? path) {
                        if (path != null) {
                          Navigator.push(
                            context,
                            MaterialPageRoute(
                              builder: (context) => DetailScreen(
                                imagePath: path,
                              ),
                            ),
                          );
                        } else {
                          print('Image path not found!');
                        }
                      });
                    },
                  ),
                ),
              )
            ],
          )
        : Container(
            color: Colors.black,
            child: Center(
              child: CircularProgressIndicator(),
            ),
          ),
  );
}

So, you have completed adding the camera to your app. Now, you can analyze the captured images and recognize the text in them. We will perform the text recognition for the image inside the DetailScreen.

Integrating ML Kit

Import the google_ml_kit plugin in your pubspec.yaml file:

google_ml_kit: ^0.3.0

You have to pass the path of the image file to the DetailScreen. The basic structure of the DetailScreen is defined below:

// Inside image_detail.dart file

import 'dart:async';
import 'dart:io';

import 'package:flutter/material.dart';
import 'package:google_ml_kit/google_ml_kit.dart';

class DetailScreen extends StatefulWidget {
  final String imagePath;

  const DetailScreen({required this.imagePath});

  @override
  _DetailScreenState createState() => _DetailScreenState();
}

class _DetailScreenState extends State<DetailScreen> {
  late final String _imagePath;
  late final TextDetector _textDetector;
  Size? _imageSize;
  List<TextElement> _elements = [];

  List<String>? _listEmailStrings;

  Future<void> _getImageSize(File imageFile) async {
    // TODO: Retrieve the image size here
  }

  void _recognizeEmails() async {
    // TODO: Initialize the text recognizer here, and
    // parse the text to find email addresses in it
  }

  @override
  void initState() {
    super.initState();
    _imagePath = widget.imagePath;
    // Initializing the text detector
    _textDetector = GoogleMlKit.vision.textDetector();
    _recognizeEmails();
  }

  @override
  void dispose() {
    // Disposing the text detector when not used anymore
    _textDetector.close();
    super.dispose();
  }

  @override
  Widget build(BuildContext context) {
    return Scaffold(
      appBar: AppBar(
        title: Text("Image Details"),
      ),
      body: Container(),
    );
  }
}

In the above code snippet, we have initialized the text detector using Google ML Kit inside the initState() method and disposed of it inside the dispose() method. You will have to define two methods:

- _getImageSize(): For retrieving the captured image size

- _recognizeEmails(): For recognizing text and finding email addresses

Retrieving image size

Inside the _getImageSize() method, you have to first fetch the image with the help of its path and then retrieve the size from it.

// Fetching the image size from the image file
Future<void> _getImageSize(File imageFile) async {
  final Completer<Size> completer = Completer<Size>();

  final Image image = Image.file(imageFile);
  image.image.resolve(const ImageConfiguration()).addListener(
    ImageStreamListener((ImageInfo info, bool _) {
      completer.complete(Size(
        info.image.width.toDouble(),
        info.image.height.toDouble(),
      ));
    }),
  );

  final Size imageSize = await completer.future;
  setState(() {
    _imageSize = imageSize;
  });
}
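The Completer in the snippet above bridges a callback-style listener and a Future. The following is a minimal, pure-Dart sketch of that pattern, not the article's code: loadDimensions() and the Dimensions class are hypothetical stand-ins for the image stream listener and Size. It also adds an isCompleted guard, since calling complete() twice on the same Completer throws a StateError and an image listener may fire more than once.

```dart
import 'dart:async';

/// Hypothetical value type standing in for Flutter's Size.
class Dimensions {
  const Dimensions(this.width, this.height);
  final int width;
  final int height;
}

/// Hypothetical callback-style API standing in for an image stream listener.
void loadDimensions(void Function(Dimensions) onLoaded) {
  // Deliver the result asynchronously, as a real loader would.
  Timer.run(() => onLoaded(const Dimensions(1080, 1920)));
}

/// Wraps the callback-style API in a Future by using a Completer.
Future<Dimensions> dimensionsAsFuture() {
  final completer = Completer<Dimensions>();
  loadDimensions((dimensions) {
    // Complete only once, even if the callback fires again.
    if (!completer.isCompleted) {
      completer.complete(dimensions);
    }
  });
  return completer.future;
}

Future<void> main() async {
  final dimensions = await dimensionsAsFuture();
  print('${dimensions.width} x ${dimensions.height}');
}
```

The same guard could be applied inside the ImageStreamListener callback if you find that the listener is invoked more than once for your images.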

Recognizing email addresses

Inside the _recognizeEmails() method, you have to perform the whole operation of recognizing the image and getting the required data from it. Here, I will show you how to retrieve email addresses from the recognized text.

Retrieve the image file from the path, and call the _getImageSize() method:

void _recognizeEmails() async {
  _getImageSize(File(_imagePath));
}

Create an InputImage object using the image path, and process it to recognize text present in it:

// Creating an InputImage object using the image path
final inputImage = InputImage.fromFilePath(_imagePath);
// Retrieving the RecognisedText from the InputImage
final text = await _textDetector.processImage(inputImage);

Now, we have to retrieve the text from the RecognisedText object and then separate out the email addresses from it. The text is present in blocks -> lines -> text.

// Regular expression for verifying an email address
String pattern = r"^[a-zA-Z0-9.!#$%&'*+/=?^_`{|}~-]+@[a-zA-Z0-9](?:[a-zA-Z0-9-]{0,253}[a-zA-Z0-9])?(?:\.[a-zA-Z0-9](?:[a-zA-Z0-9-]{0,253}[a-zA-Z0-9])?)*$";
RegExp regEx = RegExp(pattern);

List<String> emailStrings = [];

// Finding and storing the text String(s)
for (TextBlock block in text.textBlocks) {
  for (TextLine line in block.textLines) {
    if (regEx.hasMatch(line.lineText)) {
      emailStrings.add(line.lineText);
    }
  }
}

Store the text retrieved in the form of a list to the _listEmailStrings variable.

setState(() {
  _listEmailStrings = emailStrings;
});
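Because the pattern used here is anchored with ^ and $, it only matches a line that consists entirely of an email address. If your images contain lines such as "Contact: jane.doe@example.com", a looser, unanchored search may work better. The sketch below is a pure-Dart illustration under that assumption: the simplified pattern and the extractEmails() helper are not part of the article or the plugin, and the pattern is deliberately less strict than the one above.

```dart
/// Simplified, unanchored email pattern (an assumption for this sketch;
/// it is looser than the anchored pattern used in the article).
final RegExp emailPattern =
    RegExp(r'[a-zA-Z0-9._%+-]+@[a-zA-Z0-9-]+(?:\.[a-zA-Z0-9-]+)+');

/// Returns every email-like substring found in the given lines of text.
List<String> extractEmails(Iterable<String> lines) {
  final emails = <String>[];
  for (final line in lines) {
    // allMatches finds each occurrence, even when a line has extra text.
    for (final match in emailPattern.allMatches(line)) {
      emails.add(match.group(0)!);
    }
  }
  return emails;
}

void main() {
  final lines = [
    'Contact: jane.doe@example.com',
    'Phone: 555-0100',
    'sales@example.org, support@example.org',
  ];
  // prints [jane.doe@example.com, sales@example.org, support@example.org]
  print(extractEmails(lines));
}
```

In the app, you could pass the recognized line strings to such a helper instead of testing each whole line with hasMatch(). Note that this would make the extracted email differ from the full line text, which matters if you later highlight whole lines.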

Building the UI

Now, with all the methods defined, you can build the user interface of the DetailScreen. The UI will consist of a Stack with two widgets, one for displaying the image and the other for showing the email addresses.

@override
Widget build(BuildContext context) {
  return Scaffold(
    appBar: AppBar(
      title: Text("Image Details"),
    ),
    body: _imageSize != null
        ? Stack(
            children: [
              Container(
                width: double.maxFinite,
                color: Colors.black,
                child: AspectRatio(
                  aspectRatio: _imageSize!.aspectRatio,
                  child: Image.file(
                    File(_imagePath),
                  ),
                ),
              ),
              Align(
                alignment: Alignment.bottomCenter,
                child: Card(
                  elevation: 8,
                  color: Colors.white,
                  child: Padding(
                    padding: const EdgeInsets.all(16.0),
                    child: Column(
                      mainAxisSize: MainAxisSize.min,
                      crossAxisAlignment: CrossAxisAlignment.start,
                      children: <Widget>[
                        Padding(
                          padding: const EdgeInsets.only(bottom: 8.0),
                          child: Text(
                            "Identified emails",
                            style: TextStyle(
                              fontSize: 20,
                              fontWeight: FontWeight.bold,
                            ),
                          ),
                        ),
                        Container(
                          height: 60,
                          child: SingleChildScrollView(
                            child: _listEmailStrings != null
                                ? ListView.builder(
                                    shrinkWrap: true,
                                    physics: BouncingScrollPhysics(),
                                    itemCount: _listEmailStrings!.length,
                                    itemBuilder: (context, index) =>
                                        Text(_listEmailStrings![index]),
                                  )
                                : Container(),
                          ),
                        ),
                      ],
                    ),
                  ),
                ),
              ),
            ],
          )
        : Container(
            color: Colors.black,
            child: Center(
              child: CircularProgressIndicator(),
            ),
          ),
  );
}

When the _imageSize variable is null, it will display a CircularProgressIndicator.

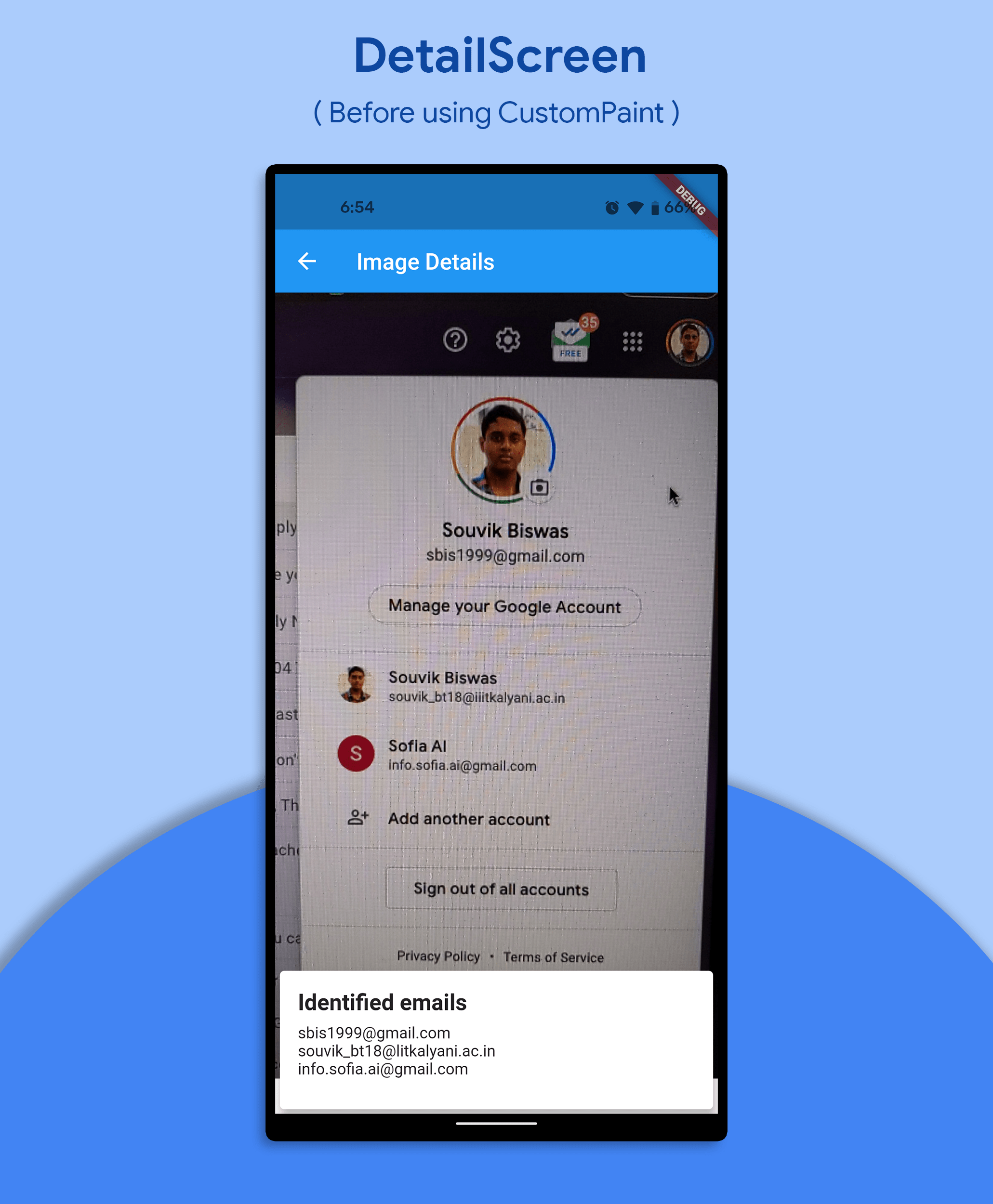

The app will look like this:

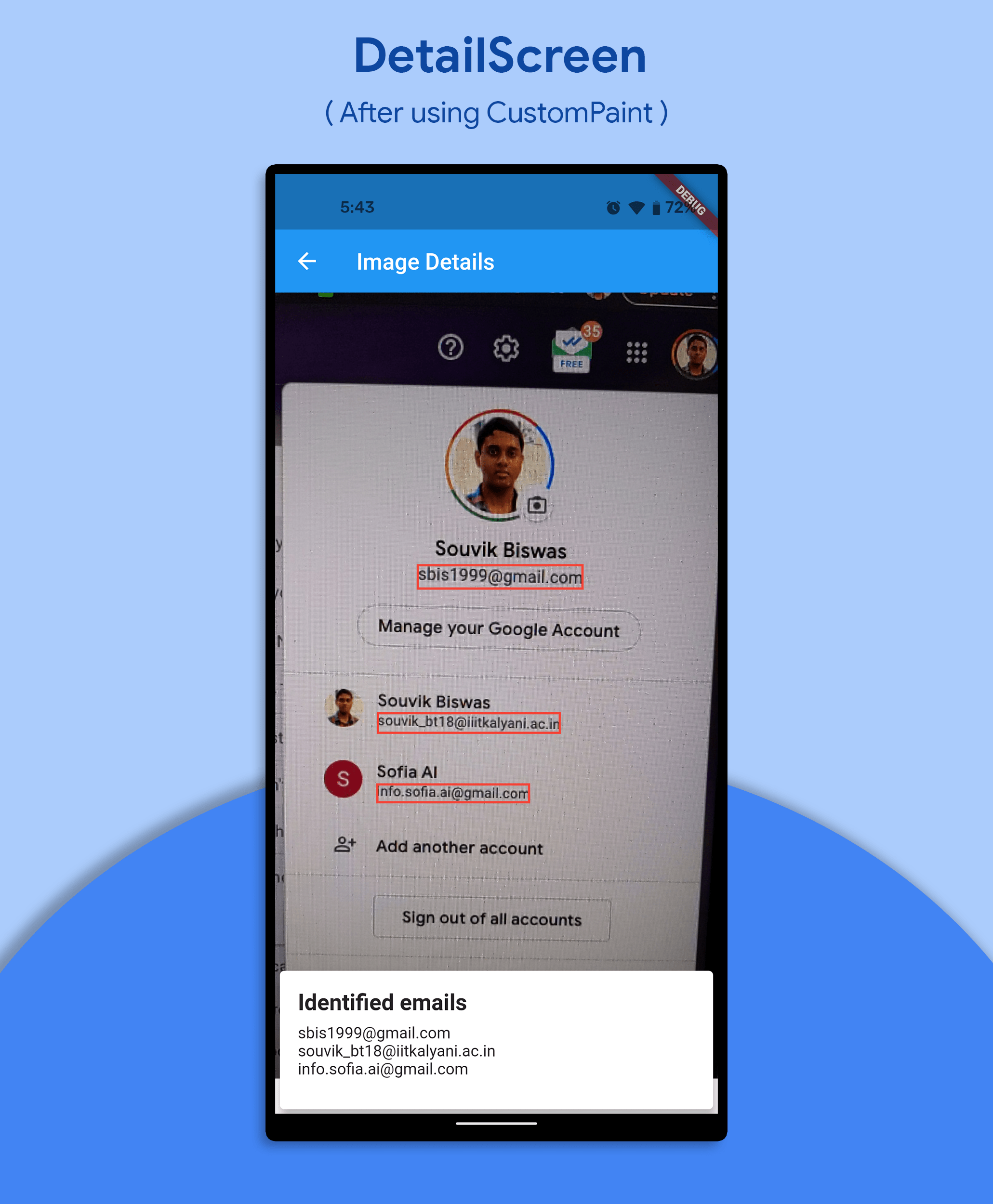

Marking the detected text

You can use the CustomPaint widget to mark the email addresses using rectangular bounding boxes.

First of all, we have to make a modification to the _recognizeEmails() method in order to retrieve the TextElement objects from each line.

List<TextElement> _elements = [];

void _recognizeEmails() async {
  // ...

  // Finding and storing the text String(s) and the TextElement(s)
  for (TextBlock block in text.textBlocks) {
    for (TextLine line in block.textLines) {
      if (regEx.hasMatch(line.lineText)) {
        emailStrings.add(line.lineText);

        // Retrieve the elements and store them in a list
        for (TextElement element in line.textElements) {
          _elements.add(element);
        }
      }
    }
  }

  // ...
}

Wrap the AspectRatio containing the image with the CustomPaint widget.

CustomPaint(
  foregroundPainter: TextDetectorPainter(
    _imageSize!,
    _elements,
  ),
  child: AspectRatio(
    aspectRatio: _imageSize!.aspectRatio,
    child: Image.file(
      File(_imagePath),
    ),
  ),
),

Now, you have to define the TextDetectorPainter class, which will extend CustomPainter.

// Helps in painting the bounding boxes around the recognized
// email addresses in the picture
class TextDetectorPainter extends CustomPainter {
  TextDetectorPainter(this.absoluteImageSize, this.elements);

  final Size absoluteImageSize;
  final List<TextElement> elements;

  @override
  void paint(Canvas canvas, Size size) {
    // TODO: Define painter
  }

  @override
  bool shouldRepaint(TextDetectorPainter oldDelegate) {
    return true;
  }
}

Inside the paint() method, compute the scale factors between the size of the display area and the original image size:

final double scaleX = size.width / absoluteImageSize.width;
final double scaleY = size.height / absoluteImageSize.height;

Define a method called scaleRect(), which converts the bounding box of a detected element from image coordinates to display coordinates so that the rectangular boxes line up with the text on screen.

Rect scaleRect(TextElement container) {
  return Rect.fromLTRB(
    container.rect.left * scaleX,
    container.rect.top * scaleY,
    container.rect.right * scaleX,
    container.rect.bottom * scaleY,
  );
}

Define a Paint object:

final Paint paint = Paint()
  ..style = PaintingStyle.stroke
  ..color = Colors.red
  ..strokeWidth = 2.0;

Use each TextElement to draw the rectangular markings:

for (TextElement element in elements) {
  canvas.drawRect(scaleRect(element), paint);
}
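To make the scaling step concrete, here is a small pure-Dart sketch of the same arithmetic, using Rectangle from dart:math in place of Flutter's Rect. The scaleBox() helper, its parameter names, and the sample numbers are assumptions made for illustration only. It scales left, top, width, and height, which is equivalent to scaling the left, top, right, and bottom edges as the painter does.

```dart
import 'dart:math';

/// Scales a bounding box from image pixel coordinates to display
/// coordinates (hypothetical helper mirroring the scaleX/scaleY logic).
Rectangle<double> scaleBox(
  Rectangle<double> box, {
  required double imageWidth,
  required double imageHeight,
  required double displayWidth,
  required double displayHeight,
}) {
  final double scaleX = displayWidth / imageWidth;
  final double scaleY = displayHeight / imageHeight;
  return Rectangle<double>(
    box.left * scaleX,
    box.top * scaleY,
    box.width * scaleX,
    box.height * scaleY,
  );
}

void main() {
  // A 1000x2000 image shown in a 500x1000 area gives a scale of 0.5.
  final scaled = scaleBox(
    Rectangle<double>(200.0, 400.0, 300.0, 60.0),
    imageWidth: 1000,
    imageHeight: 2000,
    displayWidth: 500,
    displayHeight: 1000,
  );
  // Each coordinate is halved: left 100, top 200, width 150, height 30.
  print([scaled.left, scaled.top, scaled.width, scaled.height]);
}
```

Without this scaling, the boxes would be drawn at the image's pixel coordinates and would miss the text whenever the image is displayed at a size different from its original resolution.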

After adding the markings, the app will look like this:

Running the app

Before you run the app on your device, make sure that your project is properly configured.

Android

Go to project directory -> android -> app -> build.gradle, and set the minSdkVersion to 26:

minSdkVersion 26

Now you are ready to run the app on your device.

iOS

Currently, the plugin doesn’t support the iOS platform. You can track the plugin’s progress on its GitHub repo.

Conclusion

ML Kit provides fast on-device machine learning with its best-in-class machine learning models and advanced processing pipelines, which you can integrate easily with any mobile app using the API. You can use it to add many other functionalities as well, like detecting faces, identifying landmarks, scanning barcodes, labeling images, recognizing poses and more. To learn more about ML Kit, head over to their official site.

The GitHub repo of the project is available here.

Souvik Biswas is a passionate Mobile App Developer (Android and Flutter). He has worked on a number of mobile apps throughout his journey. He loves open-source contribution on GitHub. He is currently pursuing a B.Tech degree in Computer Science and Engineering from Indian Institute of Information Technology Kalyani. He also writes Flutter articles on Medium - Flutter Community.
